Okay people, the time has come. It's time for me to rant about AI.

First things first, none of the content on this site is generated by AI. I mean, to be honest, sometimes - all too often - I basically come here to rant. And asking an AI to rant on my behalf really wouldn't be the same! But I don't use it for any of my projects or my research either. Sorry, AI fans. It's not my thing.

Maybe that's just me, maybe I'm just a maverick - because it feels like almost everyone has been caught up in the insane hype of generative AI recently. I'm hearing nothing but a constant barrage of marketing from commercial entities and news outlets alike saying it's going to change our jobs and our lives and make us all more 'efficient' and 'productive' - before totally replacing us altogether, of course.

The leading generative AI tool - ChatGPT - has generated so much hype that everyone wants their share of the cake. As such, many websites and apps have now integrated their own AI functions, using either ChatGPT directly, or some similar software. One such website is the question and answer site Quora, which I was unfortunate enough to visit today (it's a mixed bag, let me tell you). Now, in a bid, I'm sure, to 'improve the quality of the content' on their site, and make it more 'efficient' and 'productive' for their users, they have added a feature which gives ChatGPT's response to each question that has been asked on the site, alongside the existing human user responses.

I was searching for typical values of a diode parameter called 'forward recovery time'. You don't really have to understand what forward recovery is to understand my issue here though. Diodes - which are a common electronic component, for those unaware (I'm catering to all audiences with this post) - have many properties and parameters that might be given to you by the manufacturer. Engineers need to know these parameters so they can use the device in a circuit they build and get predictable results. One of these parameters, which is fairly well known by engineers, is called 'reverse recovery time'. Another, slightly lesser known, is the 'forward recovery time' which I just mentioned.

Now reverse recovery time presents a notable issue which many engineers need to consider in their designs, as it affects circuit dynamics and causes power losses. It is often specified in device datasheets. But forward recovery time is less well known. It causes fewer problems and usually doesn't need to be considered, and as such, many device manufacturers don't even seem to specify it in their datasheets. I, however, had a case where I needed to consider it, because something I built failed (not an uncommon occurrence in experimental power electronics) and I suspected that forward recovery had contributed.

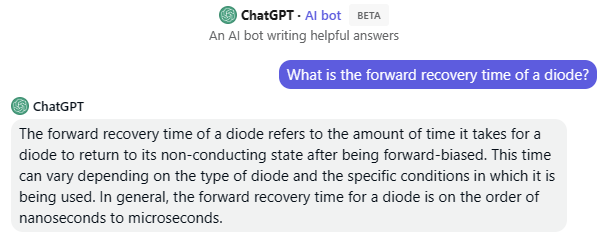

I needed to find out if this was really a plausible cause of the failure, so I was searching for typical values of forward recovery time - which would be on the order of nanoseconds - to evaluate the possibility. And I clicked on that Quora page, because the Google search preview showed that one of the human answers had mentioned typical values of the parameter. But at the top of the page, before the human-generated answers, was a preview of ChatGPT's answer to the question, "What is the forward recovery time of a diode?", and I couldn't help but click on it.

ChatGPT's response was fascinating, authoritative, and completely wrong. ChatGPT had answered "What is the reverse recovery time of a diode?" without even knowing it. The first sentence of its answer confirms it thinks it's answering the right question, and it repeats 'forward' later on as well, but the definition exactly matches that of reverse recovery time, not forward recovery time. To anyone in doubt, forward recovery time is the time between a forward voltage being applied to a reverse biased diode, and when the diode conducts in the forward direction. Reverse recovery is the time in which a diode won't block reverse voltages when they are applied, after it has been conducting in the forward direction. ChatGPT got it completely wrong.

I suspect the reason this has happened is that reverse recovery is a much more common topic than forward recovery. However, the word 'forward' occurs commonly in texts describing reverse recovery, because it is integral to describing reverse recovery. Without claiming to have any expert knowledge on generative AI - it's not my thing - this appears to prove the notion that it generates output based on statistics and the correlation of words in its input data, without really understanding meaning. ChatGPT gets its information from the internet, or at least 2021's internet. Because of the nature of these two parameters, as I described above, there are far more texts on the internet describing reverse recovery with the word 'forward' somewhere in them than there are texts actually describing forward recovery. I suspect that this difference in frequency of occurrence leads ChatGPT to paraphrase the common definition of reverse recovery when asked about forward recovery.
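To make that suspicion a bit more concrete, here is a toy sketch in Python. The corpus is entirely made up for illustration, and the function names are my own. It is nothing like how a real language model works, and I'm not claiming it is. It only shows how simple counting can tie the word 'forward' more strongly to reverse recovery than to forward recovery when one topic dominates the text.

```python
from collections import Counter

# Hypothetical toy corpus of (topic, text) pairs, invented for illustration.
# Reverse recovery is deliberately over-represented, as I suspect it is online.
CORPUS = [
    ("reverse", "reverse recovery time is how long a diode keeps conducting after forward current stops"),
    ("reverse", "after forward conduction the diode cannot block reverse voltage until reverse recovery ends"),
    ("reverse", "reverse recovery charge depends on the forward current before turn-off"),
    ("reverse", "fast diodes have a short reverse recovery time"),
    ("forward", "forward recovery time is the delay before a diode conducts fully in the forward direction"),
]


def topics_mentioning(word, corpus):
    """Count, per topic, how many texts contain `word` as a whole token."""
    counts = Counter()
    for topic, text in corpus:
        if word in text.split():
            counts[topic] += 1
    return counts


def most_likely_topic(word, corpus):
    """Return the topic whose texts mention `word` most often."""
    return topics_mentioning(word, corpus).most_common(1)[0][0]


if __name__ == "__main__":
    print(topics_mentioning("forward", CORPUS))
    print(most_likely_topic("forward", CORPUS))
```

In this made-up corpus, three of the four texts that mention 'forward' are about reverse recovery, so a system that only counts words would associate 'forward' with the wrong topic.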

I already knew both definitions before I saw ChatGPT's answer - I was looking for typical values, not the definition, and only saw it incidentally. So I knew it was wrong. But not everyone does! I think you can probably see how this experience reflects something very general and very concerning about AI: if we begin to trust it to give us correct answers - which many people will in time, and especially with all this hype we are being exposed to - then we will believe what it says and we will be led astray when it gets things wrong. In this case, I had to be an engineer to know that ChatGPT was wrong. And yet, the next generation of engineers will be born and bred on generative AI's answers, and they won't know. And if we aren't careful, sources like ChatGPT and other generative AI will become more ubiquitous and 'trusted' than any single human entity. ChatGPT is already at the top of Quora's pages after all! And of course we'd be tempted to believe they're reliable: they can very quickly sum up vast amounts of content from different sources, and produce a single, coherent answer - they can do that in a way no human can. And they can bloody well get it wrong.

To ChatGPT, forward recovery and reverse recovery are basically the same thing. In reality, they are totally different. And when you're working in the real world, like some of us still do, and you're building circuits, this stuff matters. You just can't do engineering with a tool that can gloss over minor differences like this. Those minor differences are crucial. They're what engineering is all about. So in terms of ChatGPT coming for my job? I'm not that scared yet.

But in one way, at least, I'm in agreement with the AI proponents. It's not going to be a fad, or a flop, like some people perhaps think. It will change everything. We're about to enter a new age - the efficient, productive age where forward recovery means reverse recovery. Don't argue. No, please. Pull yourself together - stop believing in this forward recovery nonsense. The AI overlords have cleared up all of our confusion.

But guess what powers the huge machine learning servers that are required to train and run AI models?

Power electronics. Probably with diodes somewhere. And you damn well bet they have forward recovery times.
